Server management on behalf of the client is a fairly common service today, complementing the portfolio of many software houses and outsourcing companies. There are still, however, two types of companies on the market: those that provide high-quality services (backed by real competence) at an attractive price (achieved through synergy, not crude cost-cutting), and… others. If you use such services, have you ever wondered which type of supplier you currently have?

Question of quality

All of us have been customers many times, and we have a better or worse idea of what high quality is. Often we simply equate it with our satisfaction with the service. The problem arises with more advanced services, or with those we have been using only for a short time, or even for the first time: we don't know what we should really expect from a professional service, and what is merely solid mediocrity.

Let's think about the criteria of professionalism in server management services. In 2018, not in 2008 or 1998. The first answer that comes to mind is, of course, "customer satisfaction." The thing is, this satisfaction is subjective. It depends, for example, on support reaction times or other variable parameters derived from the purchased hosting plan, or even on personal impressions from conversations with a support engineer (people may or may not take a liking to each other).

In 2018, another, completely objective parameter is absolutely critical: security. In fact, keeping our clients' data safe is the very reason server management is entrusted to specialists rather than to, for example, a full-time programmer who, after all, also knows how to install Linux.

How to provide high-quality services

The question arises, however, on the supplier's side: how to provide high-quality services (and therefore with maximum emphasis on security) at competitive market prices, while paying relatively high wages to employees (in Poland today we have an employee's market, especially in IT, which drives rates up), and still make money on these services?

The answer is very simple and complicated at the same time. It's simply a matter of choosing the right tools, ones that fit your real business model. This seemingly simple answer becomes complicated, however, once we begin to delve into the details.

The key to selecting the right tools is understanding your own business model at the operational level, i.e. not at the level of contracts and money flow, or even marketing and sales strategy, but at the level of actual work hours and possible synergies between analogous activities for different clients. Server management doesn't resemble a production line, on which all activities are fully reproducible. The trick is to find certain patterns and dependencies, on the basis of which one can build synergy and choose the right tools.

Unfortunately, most companies that provide good-quality server administration services instead go to one of two extremes that prevent them from building this synergy, which leads to high costs. As a result, their boards don't see these services as promising, and in time they become merely a smaller or larger add-on to the company's development services. In the long run, this pushes good-quality services out of the market in favor of services of dubious quality. But let's go back to the extremes mentioned. A company can go in one of the following directions:

- Proprietary, usually closed (or sometimes "shared source") software for managing services. At the beginning, it meets the company's needs perfectly. Over time, however, these needs change, because technology changes very quickly and the company itself evolves. As a result, after 3-4 years, the company is stuck with a system which, firstly, isn't attractive to potential employees (because the experience gained with it isn't transferable to any other company), and secondly, requires constant and increasing spending on maintenance and "small development."

- Widely known software, often used and liked, or at least recognized by many IT people, only… it fits someone's idea of their business model instead of the real one. Why? The reason is very simple: most popular tools are written either for large companies managing homogeneous IT infrastructure (meaning that many servers are used for common purposes, have common users, etc.), or for hosting companies (serving different clients but offering strictly defined services).

Open source tools

Interestingly, as of 2018, there are still no widely known open-source tools for heterogeneous infrastructure management, that is, tools supporting different owners, different configurations, different installed services and applications, and, above all, completely different business goals and performance indicators. Presumably, this is because the authors of such tools have no interest in publishing them as open source and reducing their potential profit. All globally used tools (e.g. Puppet, Chef, Ansible, Salt and others) are designed to manage homogeneous infrastructure. Of course, you can run a separate instance of one of these tools for each client, but this won't scale to many clients and won't build any synergy or competitive advantage.

At this point, it's worth mentioning how we dealt with this at Espeo. Espeo Software provides software development services to over 100 clients from around the world. For several dozen of them, these services are supplemented by management of both production and dev/test servers, and by overall DevOps support. It's a very specific business model, completely different, at least at the operational level, from that of e.g. a web hosting company or a company that manages servers for one big client.

Therefore, one should ask what the key factors for building synergies are in such a business model, and, above all, what this synergy should be built on, so that it doesn't come at the expense of professionalism. At Espeo, we decided on a dual-stack model, in which, in simplified terms, server support is divided into an infrastructure level and an application level. This division is conceptual rather than rigid, since the two levels overlap in many respects.

This division, however, provides the basis for building synergies at the infrastructure level, where, unlike at the application level, the needs of very different clients are similar: security. At the infrastructure level, we use the open-source micro-framework Server Farmer, which is actually a collection of over 80 separate solutions, closely related to the security of the Linux system and various aspects of heterogeneous infrastructure management based on this system.

The Server Farmer's physical architecture is very similar to that of the Ansible framework, which we use at the application level. Thanks to this similarity, it's possible, for example, to use the same network architecture on both levels and for all clients. Most of all, however, we're able to build huge synergy in the area of security by moving from managing each contract separately to a production line model that ensures the right level of security for all clients and all servers. In an era when tens of thousands of machines scan the whole Internet for vulnerabilities and automatically infect computers and servers, managing contracts separately is simply a weak solution.

Building synergy

Take, for example, the process of regularly updating the system software on servers, which in 2018 is absolutely necessary before one can talk about any reasonable level of security at all. Modern Linux distributions have automatic update mechanisms. However, they only update those software components whose update won't disrupt the operation of the services. Everything else should be updated manually (i.e. through appropriate tools, but under human supervision).

And here is the often-repeated problem in many companies: using tools that are known and liked by employees, even if these tools don’t fit the company’s business model and don’t speed up this simple operation in any way.

Let's imagine a company that supports IT infrastructure for, say, 50 clients, using 50 separate installations of Puppet, Chef, Ansible or, even worse, a combination of these tools. As a result, the same group of administrators has to be managed 50 times, the system architecture planned 50 times, log analysis set up 50 times, and so on. This is, of course, feasible, and in itself doesn't lower the level of security. However, in such a model it's impossible to use the employees' time effectively, because with 50 separate installations most of that time is consumed by simple, repetitive, easy-to-automate activities, and by configuring the same elements, just in different places. It follows that any business conducted this way isn't scalable and gradually marginalizes itself.

This mistake, however, isn't due to poor judgment or bad intentions on the part of these companies. It's simply that good-quality open-source tools for managing heterogeneous infrastructure are relatively specialized software, so potential employees rarely know them. What's more, many companies decide to create dedicated instances of Puppet or Ansible for each client because, from the employee's perspective, the undeniable advantage of these tools is that experience with them transfers between successive employers, even if for the employer it means the process doesn't scale.

If we look at it from the point of view of building synergy, and with it a lasting business advantage, selecting tools only to satisfy current, short-term HR needs is a weak idea. A much better approach is to strike a compromise between "employability" and the scalability of the employees' work. This is why, in our dual-stack approach, with the help of the Server Farmer, each employee responsible for infrastructure-level management can manage approx. 200 servers per daily hour (one hour spent each working day).

That means one theoretical job position, understood as a full 8 hours worked each working day (i.e. a full 168 hours per month), can support approx. 1,600 servers across the entire process of maintaining a high level of security (including daily review of logs, applying software updates, user and permission management, and many other everyday activities). Of course, the real, effective workday of a typical IT employee is closer to five than eight hours. Nevertheless, the theoretical eight hours is the basis for all comparisons. If you already use server management services, ask your current provider how many servers each employee is able to support without loss of quality…
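The arithmetic behind these figures can be sketched in a few lines of Python. This is only an illustration: the 200 servers per daily hour, the 8-hour theoretical day and the 168 hours per month come from the text above, while the 21 working days per month, the 5-hour effective day (the text only says "closer to five than eight") and the function names are assumptions made for the example.

```python
import math

# Figures taken from the article.
SERVERS_PER_DAILY_HOUR = 200      # servers one person can handle per hour spent daily
THEORETICAL_HOURS_PER_DAY = 8     # basis for all comparisons

# Assumptions made for this sketch only.
WORKING_DAYS_PER_MONTH = 21       # 8 h x 21 days gives the 168 h quoted above
EFFECTIVE_HOURS_PER_DAY = 5       # "closer to five than eight"


def servers_per_position(hours_per_day: float) -> int:
    """Servers one job position can support at the given daily hours."""
    return int(SERVERS_PER_DAILY_HOUR * hours_per_day)


def positions_needed(server_count: int, hours_per_day: float) -> int:
    """Whole job positions required to cover server_count servers."""
    return math.ceil(server_count / servers_per_position(hours_per_day))


if __name__ == "__main__":
    print("hours per month:", THEORETICAL_HOURS_PER_DAY * WORKING_DAYS_PER_MONTH)
    print("theoretical capacity:", servers_per_position(THEORETICAL_HOURS_PER_DAY))
    print("effective capacity:", servers_per_position(EFFECTIVE_HOURS_PER_DAY))
    print("positions for 2,500 servers:",
          positions_needed(2500, EFFECTIVE_HOURS_PER_DAY))
```

Under these assumptions, the theoretical position covers 1,600 servers, while a five-hour effective day covers about 1,000, which is why the theoretical figure is best treated as a basis for comparison rather than a staffing plan.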


Security isn’t everything

But, of course, security isn't everything. After all, nobody pays for server maintenance just so that the servers are secure, but to make money on them. And money is earned at the application level, which by its nature is quite different for each client. These differences make building synergies between individual clients' activities so hard that in practice there is no point in attempting it, since easy hiring matters more than micro-synergies. That's why at Espeo we use Ansible at this level: it's compatible with the Server Farmer, and at the same time it's widely known, which ensures an inflow of employees.

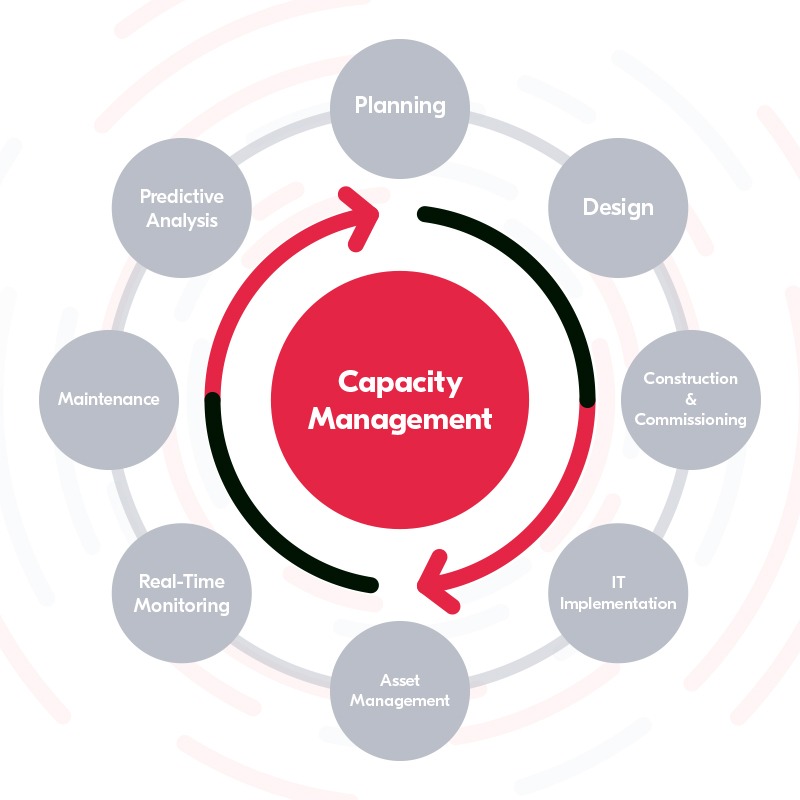

Of course, for such a dual-stack solution to work properly, it's necessary to set clear limits of responsibility, so that on the basis of these limits, as well as the SLA levels or other parameters bought by individual customers, it's possible to build specific application solutions without the actions of individual employees overlapping. Only then will it be possible to build effective capacity management processes (modeled after ITIL), providing each customer with high-quality services in a repeatable and predictable manner.

In part two, we'll describe in more technical terms which particular solutions we use, in what architecture, how these solutions map to business processes, and what processes we use for PCI DSS clients.
