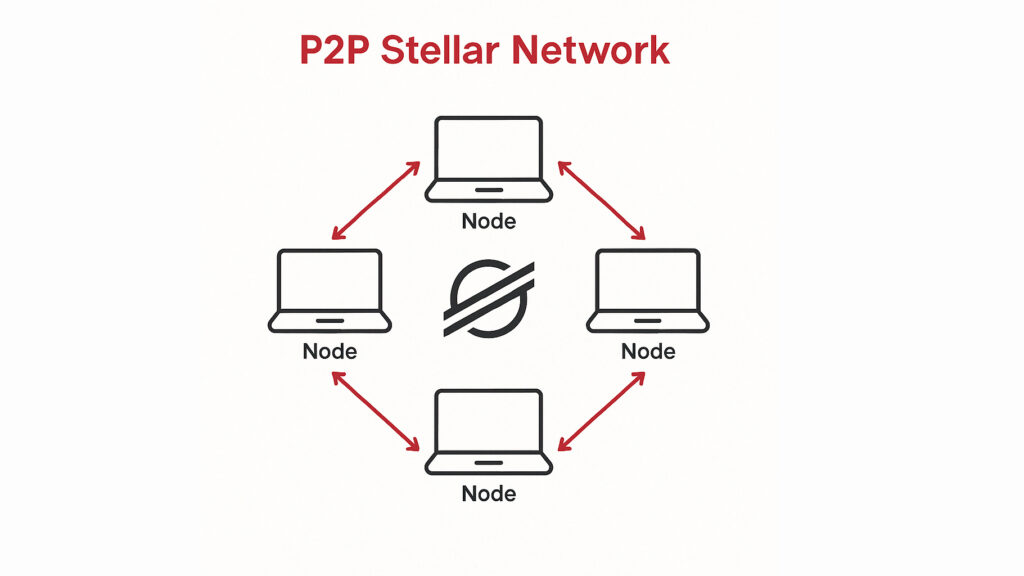

How to build a seamless Stellar peer-to-peer payments app

Blockchain technology remains a niche interest in developed markets. However, the benefits of frictionless cross-border payments are increasingly evident in regions with limited financial services. Early applications—such as cryptocurrencies—were the first to demonstrate blockchain’s potential, and they remain among the most compelling. As the world globalises and the demand for cross-border payments increases, innovation in […]

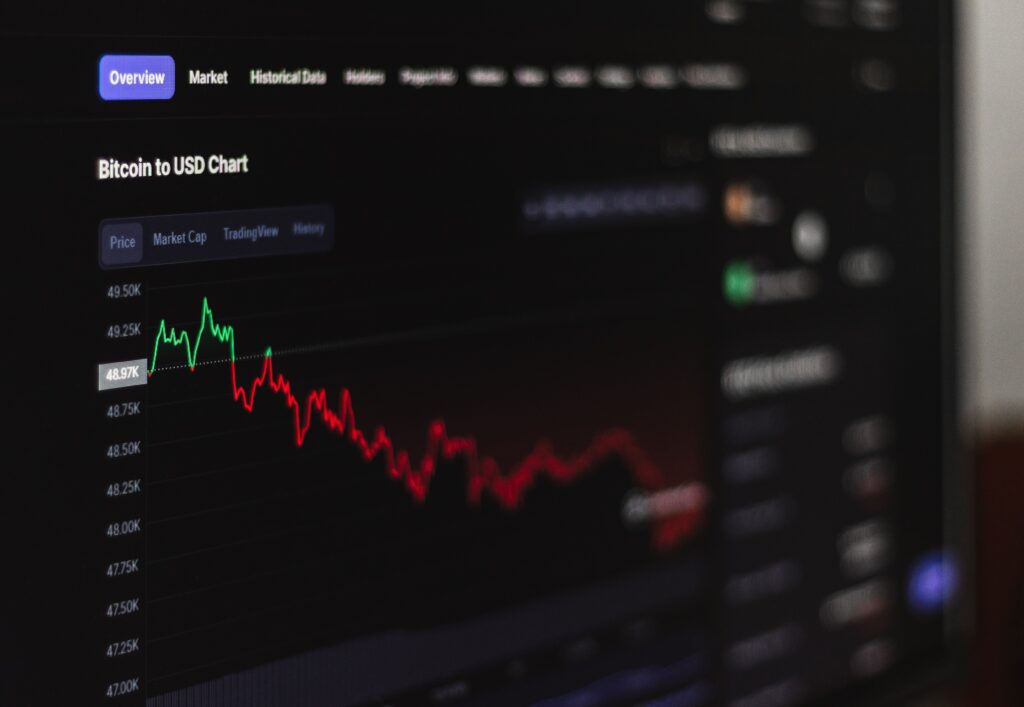

6 pitfalls to avoid in blockchain application development

Knowing your target audience is essential to launching a successful dApp. With any dApp, blockchain technology should form the basis of the product and deliver utility to end-users. Many blockchain application development projects so far have focused on buzzwords rather than on how the product will actually work. When people ask me about […]

Blockchain ecommerce is keeping the secondary luxury market honest

Florian Martigny, founder of Hong Kong-based platform Luxify, plans to launch a new standard in blockchain ecommerce. Espeo Blockchain consultants laid out ways the company can leverage the technology and corner the pre-owned luxury goods market. We’d like to share how we did it and what we learned from the project. If you’re a bargain hunter with […]

Blockchain in healthcare: how the technology could fix the industry in 2020

If you’ve spent any amount of time in a hospital, you’ve had first-hand experience with the inefficiencies of the healthcare system. Just about everyone has a story or two about red tape. More than being irritating, this friction raises prices, lowers patient satisfaction, and can even put people’s health at risk. Many tout the benefits […]