Show me your Definition of Done & I’ll tell you who you are

Analyzing a company’s Definition of Done (DoD) can tell you a lot about its work ethic and what it puts the ‘quality’ sticker on. I’ll explain a few concepts related to DoD to make this clearer. First question: does the company have a DoD? Some vendors don’t have one at all. […]

Generating presentations using Google Slides API

Imagine you need to create many presentations that look the same but have slightly different content, such as text or images. Well, you can do it all by hand if you have tons of time and find relaxation in doing repetitive tasks over and over again. However, today I’ll give you a better solution. […]

Docker for Mac: Performance Tweaks

Are you a Linux user who switched to Mac when you saw that Docker is now available as a native Mac app? Or maybe you’ve heard how great Docker is and you want to give it a try? Did you think that you could just take your Docker Compose file, launch your project and have […]

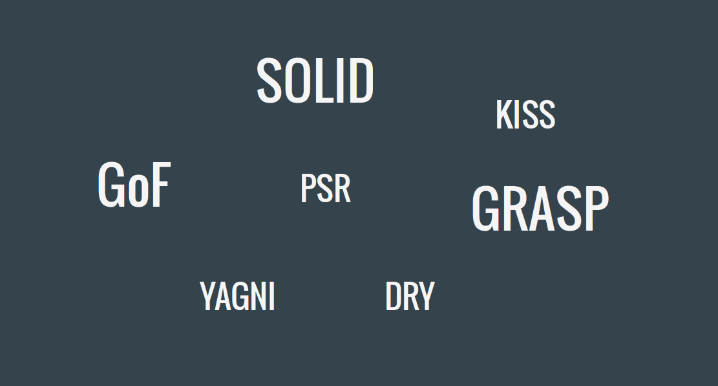

A Short Guide to Object Oriented Design

When building a house, you want it to be well designed. It’s great if someone’s tested the solutions you intend to use, and if they’re universal. For example, a standard window is cheaper than windows in various odd shapes and sizes. You don’t have to use expensive, custom-designed hinges to be able to open them. […]