[Infographic] Why a Product Owner is Better Than a CTO

![[Infographic] Why a Product Owner is Better Than a CTO](https://espeo.eu/wp-content/uploads/2016/09/infogra2_100.jpg)

We’ve written a longer article on why having a CTO is greatly overrated for both current and new product development. Startups simply need a PO much more. We’d like to show you the main arguments in a handy infographic. New product development with a PO: A Product Owner is an indispensable part […]

Using DataTables – Simple and Fast Data Listing

Looking for something to speed up your work with tables? You can use a simple jQuery plugin named DataTables. It’s a highly flexible tool that adds advanced interaction controls to any HTML table. I’ll show you how to use it. Problem: sometimes working with lists (such as product lists) just takes too long. Everything is […]

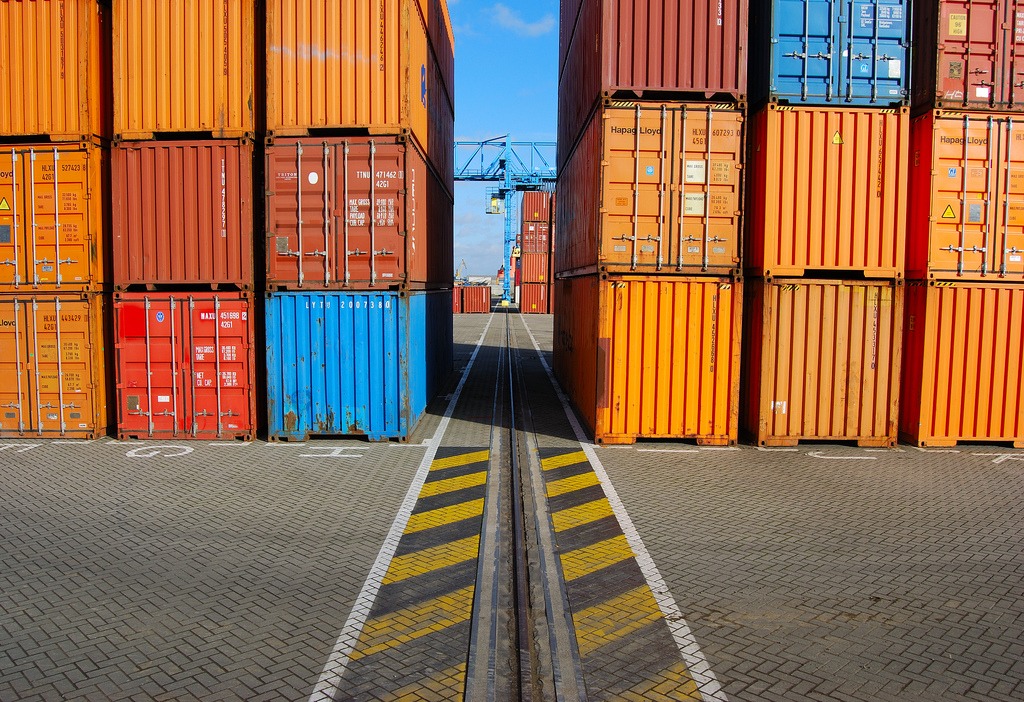

How to Build a Development Environment with Docker

Building a reliable and comfortable development environment is not an easy task, and running multiple versions of the same software can be tricky. This post shows how to build an awesome development environment with Docker. Docker is an open-source platform that automates the deployment of applications inside software containers. What are the advantages of using […]

ElasticSearch: How to Solve Advanced Search Problems

A few months ago, my team and I faced a challenge: to provide an advanced search engine with many simple (and more complicated) criteria and the ability to use a full-text search mechanism. In addition, we knew that our client demanded high efficiency and scalability. I’d like to focus on full text […]