A day in the life of a Node.js developer

Have you ever wondered what a programmer does on a daily basis? In this article, we answer that question. We invited Michał, Wojtek and Bartek, three of our Node.js developers, to share insights into their everyday professional lives and the projects they are working on. You will find all the answers to […]

Java is dominating the programming landscape

This thesis might seem controversial in 2021, when in many rankings, such as TIOBE, Java seems to be slowly being overtaken by languages such as Python. However, despite the changes caused by the growth of areas of IT in which Java has never dominated (for example, data analysis), there is no indication that in systems where this […]

Welcome Pack at Espeo Software: a box loaded with our culture and values

In many companies, a welcome pack has become standard practice, aimed at making a new employee’s first day more pleasant. At Espeo Software, we strive to give something more than just standard gadgets – our aim (apart from building a sense of belonging to the team) is to convey the culture and values that guide […]

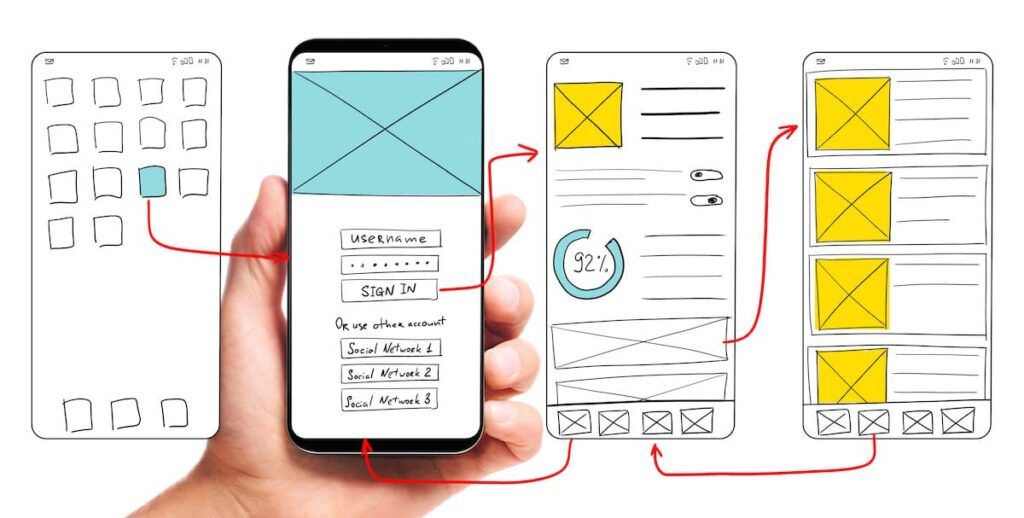

Low code no code movement: what’s that, why it’s important and how it can affect developers and IT companies

The low code no code movement is on fire right now. Why, you may ask? The answer is simple – the way developers build simple things is changing faster than you think. We assume that this hype is the real deal and that soon there will be another branch of employees on board at different IT companies […]