In this tutorial, I’ll describe how you can create your own error watcher. The final version of this project can be found on GitHub. I’ll later refer to the code from this repository, so you can check it out.

Why use even a simple error monitoring tool?

If your application is on the production or will be in near future, you should look for some kind of error monitoring tool, otherwise you’ll have a really big problem. And as a developer, let me tell you that looking for errors manually in your production environment isn’t cool.

Find the cause of the problem before your customers notice

For example, let’s say your application is doing some background processing which isn’t visible at first glance to the end user. The process fails at the one of the background steps. If you have an error monitoring tool, you’ll have the possibility to fix the bug before your customers notice it.

Reduce time of finding to fix time

Without a monitoring tool, when a bug is reported your team would probably start looking through logs manually. This significantly extends the fix time. Now, imagine that your team gets a notification right away when the error appears – you can now skip that time-consuming part.

Monitoring infrastructure

In this tutorial, we’ll use Elasticsearch + Logstash + Kibana stack to monitor our application. ELK is free when you use Open Source and Basic subscriptions. If you want to use custom functionalities, e.g. alerting, security, machine learning, you’ll need to pay.

Unfortunately, alerting isn’t free. If you’d like to send an alert message to a Slack channel or email someone about a critical error, you’ll need to use “semi-paid” X-Pack. Some parts are free in Basic subscription.

However, we can implement our own watcher to bypass Elastic’s high costs. I’ve got a good news for you – I’ve implemented it for you already. We’ll get back to it later.

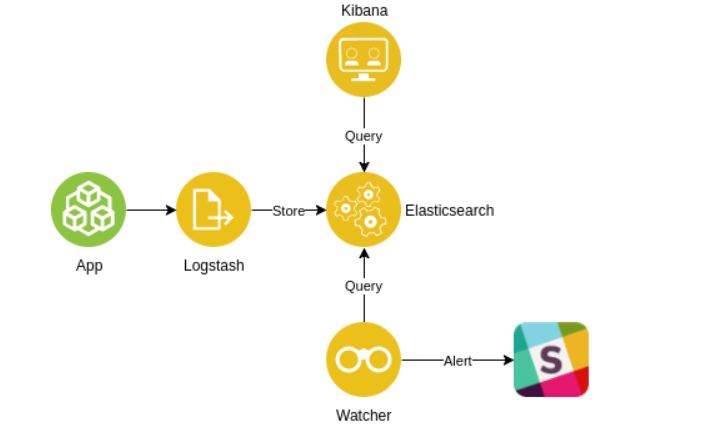

The image below describes how our infrastructure is going to look like.

Logstash reads the logs, extracts the information we want, and then sends transformed data to Elasticsearch.

We will query Elasticsearch for recent logs containing error log level using our custom Node.js Elasticsearch Watcher. The Watcher will send alert messages into a Slack channel when the query returns some results. The query will be executed every 30s.

Kibana is optional here, however it’s bundled in the repo so if you’d like to analyze application logs in some fancy way, here you go. I won’t describe it in this article, so visit the Kibana site to see what you can do with it.

Dockerized ELK stack

Setting up Elasticsearch, Logstash and Kibana manually is quite boring, so we’ll use an already Dockerized version. To be more precise, we’ll use Docker ELK repository which contains what we need. We’ll tweak this repo to meet our requirements, so either clone it and follow the article or browse the final repo.

Our requirements:

- Reading logs from files

- Parsing custom Java logs

- Parsing custom timestamp

We’re using Logback in our project and we have a custom log format. Below, you can see the Logback appender configuration:

Here are the sample logs:

Firstly, we need to update the docker-compose.yml file to consume our logs directory and custom patterns for Logstash. The Logstash service needs two extra lines in its volume section:

The first line binds our pattern directory. The second attaches logs to container. $LOGS_DIR variable will later be added to an .env file, which will give us ability to change logs dir without modifying the repository. That’s all we need.

If you’d like to persist data between container restarts, you can bind Elasticsearch and Logstash directories to some directory outside the Docker.

Here’s the .env file. You can replace logs dir with your path.

How to configure Logstash to consume app logs

Logstash’s pipeline configuration can be divided into three sections:

- Input – describes sources which Logstash will be consuming.

- Filter – processes logs e.g. data extraction, transformation.

- Output – sends data to external services

The code above is the full Logstash configuration.

The input section is quite simple. We define basic input properties such as logs path and logs beginning position when starting up Logstash. The most interesting part is the codec where we configure handling multiline Java exceptions. It will look up for beginning by, in our example, a custom timestamp and it will treat all text after till next custom timestamp as one log entry (document in Elasticsearch).

I’ve included a patterns directory, so we can use our custom pattern in multiline regex. It’s not required, you can use normal regex here.

The filter section is the most important part of a Logstash configuration. This is the place where the magic happens. Elastic defined plenty of useful plugins which we can use to transform log events. One of them is Grok, which we’ll use in the monitoring tool.

Grok parses and structures text, so you can grab all fields from your logs, e.g. timestamp, log level, etc. It works like regex. Just define your log pattern with corresponding field names and Grok will find matching values in the event. You can use default Grok patterns or create your own to match custom values.

In our example, we’ll use a custom timestamp, so we need to define a custom pattern. Grok allows us to use custom patterns ad hoc in message pattern. However, we want to use it more than once, so we defined a patterns file which we can include in places where we need the pattern e.g. multiline codec and Grok. If you use a standard timestamp format, just use the default one.

Here’s the pattern file:

The file structure is the same as in other Grok patterns. The first word in the line is the pattern name and rest is the pattern itself. You can use default patterns while defining your pattern. You can also use Regex, if none of the default matches your needs. In our case, the log format is e.g. 20180103 00:01:00.518, so we’re able to use already defined patterns.

In the output section, we define that transformed logs will be sent to the Elasticsearch.

Docker file permissions

One thing that took me some time to figure out was configuration of the file permissions of the logs accessed by dockerized Logstash.

If your logs are created as a user with id 1000, you won’t notice the problem and you can skip this step. However, you’re most likely dealing with quite the opposite. For example, you run your application on Tomcat and the logs are created by the Tomcat user and then bound as a volume to the Logstash container. The Tomcat user is not the first user (1000) in the system, so user id won’t match in the container. Default Logstash image runs as a user 1000, so it can read logs only with permission of user 1000. It doesn’t have access to other users’ files.

The trick here is to switch to root in Docker file and create new group with id matching the log creator group id and then add the Logstash user to it. Then we add the user which runs the container to the group which owns the logs on the server (sudo usermod -a -G <group> <user>). After that, we switch back to the Logstash user for security reasons to secure the container.

Filebeats – log agent

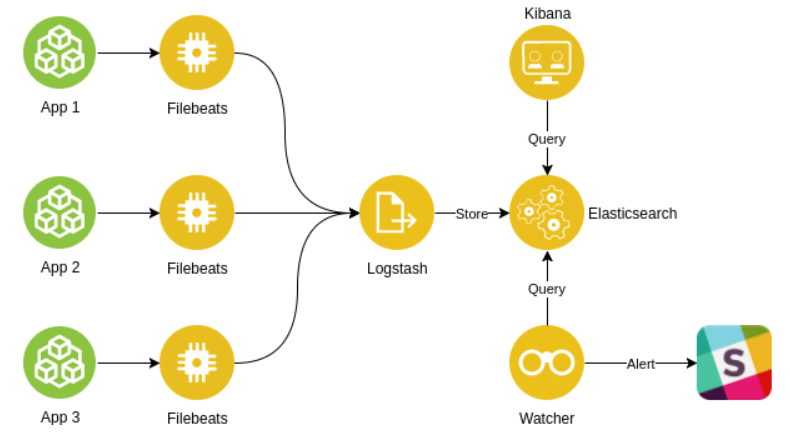

The implementation described so far can be used for one application. We could scale it to support many applications, but it wouldn’t be fun nor easy. Logstash reads lines one by one and sends them after transformation to Elasticsearch.

I’ve got a better solution for you. Elastic created family software called the Beats. One of them is Filebeats which kills the pain of log access. The Filebeats is simply a log shipper. It takes logs from the source and transfers them to Logstash or directly to Elasticsearch. With it, you can forward your data, although it can also do some of the things what Logstash does, e.g. transforming logs, dropping unnecessary lines, etc. But, Logstash can do more.

If you have more than one application or more than one instance of the application, then Filebeats is for you. Filebeats transfers logs directly to the Logstash to port defined in the configuration. You just define where should they look for logs and you define the listening part in the Logstash.

The file permission problem will of course be present if you want to run the dockerized version of the Filebeats, but that’s the cost of virtualization.

I suggest you use Filebeats for production purposes. You will be able to deploy ELK on the server which won’t be actually the prod server. Without Filebeats (with Logstash only) you’ll need to place it on the same machine where the logs reside.

Sending Slack alert message

Elastic delivers the Watcher functionality within X-Pack, bundled into the Elasticsearch and, what’s more, there is an already defined Slack action which can send custom messages to your Slack channel, not to mention more actions. However, as stated before, it’s not free. The Watcher is available in Gold subscription, so if that’s ok for you, then you can skip rest of the article. If not, let’s go further.

When I noticed that the Elastic Watcher is a paid option, I thought that I could do my own watcher which would send alert messages to Slack. It’s just a scheduled job which checks if there’s something to send, so it shouldn’t be hard, right?

The Watcher

I created an npm package called Elasticsearch Node.js Watcher, which does the basics of what the X-Pack’s Watcher does, namely watching and executing actions when specified conditions are satisfied. I chose Node.js for the the Watcher, because it’s the easiest and fastest option for a small app which would do all of the things I need.

This library takes two arguments when creating a new instance of a watcher:

- Connection configuration – it defines connection parameters to Elasticsearch. Read more about it in the documentation.

- The Watcher configuration – describes when and what to do if there’s a hit from Elasticsearch. It contains five fields (one is optional):

- Schedule – The Watcher uses it to schedule cron job.

- Query – query to be executed in Elasticsearch, the result of which will be forward to predicate and action.

- Predicate – tells if action should be executed.

- Action – task which is executed after satisfying predicate.

- Error handler (optional) – task which will be executed when an error appears.

We need to create a server which would start our Watcher, so let’s create index.js with Express server. To make the environment variables defined in .env file visible across Watcher’s files, let’s also include dotenv module.

The meat. In our configuration, we defined to query Elasticsearch every 30 seconds using the cron notation. In the query field we defined the index to be searched. By default, Logstash creates indexes named logstash-<date>, so we set it to logstash-* to query all existing indices.

To find logs, we use Query DSL in the query field. In the example, we’re looking for entries with Error log level which appeared in last 30 seconds. In the predicate field, we’ll define the condition of hits number at greater than 0 since we don’t want to spam Slack with empty messages. The action field references the Slack action described in the next paragraph.

Slack alert action

To send a message to a Slack channel or a user, we needed to set up an incoming webhook integration first. As a result, you’ll get a URL which you should put it in the Watcher’s .env file:

Ok, the last part. Here, we’re sending a POST request to Slack’s API containing a JSON with a formatted log alert. There’s no magic here. We’re just mapping Elasticsearch hits to message attachments and adding some colors to make it more fancy. In the title you can find information about the class of the error and the timestamp. See how you can format your messages.

Dockerization

Finally, we’ll dockerize our Watcher, so here’s the code of Dockerfile:

For development purposes of the Watcher, that’s enough. ELK will keep running and you’ll be able to restart the Watcher server after each change. For production, it would be better to run the Watcher alongside ELK. To run the prod version of the whole infrastructure, let’s add a Watcher service to docker-compose file. Service needs to be added to the copied docker-compose file with a “-prod” suffix.

Then, we can start up our beautiful log monitor with one docker-compose command.

docker-compose -f docker-compose-prod.yml up -d

In the final repository version, you can just execute make run-all command to start the prod version. Checkout the Readme.md, it describes all needed steps.

But…

This solution is the simplest one. I think the next step would be to aggregate errors. In the current version you’ll get errors one by one in your Slack channel. This is good for dev/stage environment, because they’re used by few users. Once you’re on production, you’ll need to tweak Elasticsearch’s query, otherwise you’ll be flooded with messages. I’ll leave it for you as homework 😉

You need to analyze the pros and cons of setting up all of this by yourself. On the market, we have good tools such as Rollbar or Sentry, so you need to choose if you want to use the “Free” (well, almost, because some work needs to be done) or the Paid option.

I hope you found this article helpful.